Share

Business Intelligence applications pose nontrivial performance, scale, and user concurrency challenges to any platform. Traditional Data Warehousing platforms a la Teradata or Netezza use proprietary HW to address them, thus quickly becoming impractically expensive for Big Data BI. Emerging Hadoop and Cloud architectures offer the raw power and abundant storage required for the job, but is that sufficient to claim the title “Big Data Warehouse”? That is the 15 Billion dollar question…

The rapidly declining sales of Teradata, and the end-of-life of Netezza testify to the newly created gap in the BI market, yet it is not clear what vendor or which platform will fill it.

The BI straight jacket

Interactive BI is a well-established and widely common practice. A typical enterprise employs hundreds of BI applications on de facto standard BI tools like Tableau or Qlik. Thousands of data driven concurrent users are addicted to interactive BI and will not accept even the slightest reduction in service or performance. Users do not give much wiggle room to IT, modifying application screens or reorganizing data in pursuit of performance will not be tolerated. In addition, vast amounts and ever growing data and rising cost of IT talent place the Big Data Warehouse on the CIO’s top 5 list.

So that is the Big Data Warehouse straight jacket… deliver snappy performance, for thousands of users, over 10x bigger datasets, while working transparently under all existing application. The solution has also to be SELF DRIVING, no administration please. Now. Questions?

The “stupid fast” approach

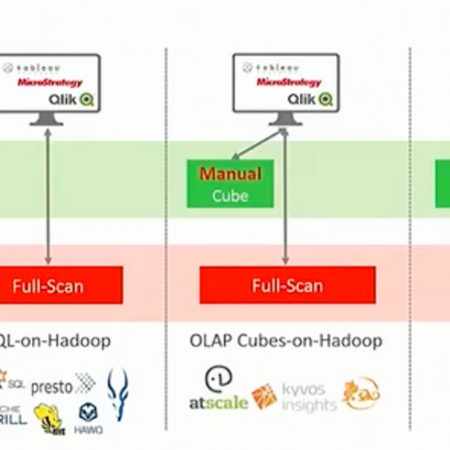

Since MPPs excel in, what else, massively parallel processing – distributing data quasi-evenly across all cluster nodes will speed up full data scans by the count of participating cluster nodes. Brilliant! At least for some batch applications like machine learning or static reports that operate on one or two passes of full data scans.

Focusing massive cluster resource on a single query has its drawbacks… Highly selective queries pertaining to small independent data subsets will still employ the whole cluster for a full data scan. Furthermore, concurrently running queries compete on all cluster nodes and thus limit the number of concurrent users. A peak in BI workload might bring other cluster applications to a screeching halt. Data partitioning may boost some queries but not help the ones concerned with non-partitioning keys. Gratuitously redundant computing will drive up operating costs on cloud platforms

Grandpa’s good old OLAP

Data aggregation is a staple of Interactive BI. It stands to reason that precompiling a set of summary tables and resolving user queries of those tables will accelerate a lion share of the workload. Tried and true! OLAP solutions offered commercially in the early 90’s did just that delivering impressive performance on small and narrow datasets for a predictable query workload. OLAP works well for financial analysis application yet falls short when applied to big data.

Selective queries, or for that matter, any query that falls out of the predefined cubes, will force a slow full data scan.

Bigger datasets and diverse applications present a more diverse query workload, rendering cube pre aggregation next to impossible. Data profiling may help improve cube coverage, yet many queries will still fall into the full data scan abyss.

BI tools like Tableau or Qlik tend to generate dynamic runtime aggregation queries that cannot be resolved by a predefined cube.

Big Data OLAP performance is spotty, posing performance “black holes” challenging risk. A “black hole” is a panel based on a query that cannot be resolved from a cube and freezing its application screen while it is resolved by a full data scan. Rearranging application screens may help, but it is very costly and disruptive.

Auto indexing and auto cubes – two strategies combined

Auto indexing stands for automatically creating an index for every column. Auto cubes are built dynamically when it can benefit an actual user query and similar future queries. Additional indexes are used to accelerate JOINS, GROUP BY, and COUNT DISTINCT queries. An optimizer picks the strategy that works best for each user query. All user queries are resolved from indexes and cubes, no full scan of the underlying data is required.

As cubes are created on demand, performance will reach its peak after a short training period. Unpopular cubes will be dropped and overlapping cubes will be merged to maintain a dynamically optimized cube set.

This solution covers the full range of queries from the selective to aggregative, posting no risk of performance “black holes”.

Doing all the heavy lifting in the background allows for a lighter workload at query time delivering snappy response times for a multitude of concurrent users.

Summary

You have to respect legacy EDWs for their performance and their ingenuity. They have defined BI and set a very high bar for their replacement. There are no shortcuts on that path, straight forward MPPs excel on full data scan batch jobs and traditional OLAP is good for predictable workloads. It will take more than the MPP divide-and-concur or the old-and-proven OLAP to stand for the too-expensive-for-its-own-good legacy EDW.