
A user on the Qlik blog asked the question "How do you handle 'Big Data'?"

Being a technology vendor that focuses on this exact area, I wanted to share some of our observations. I hope that my unavoidable vendor-biased perspective will be balanced with some useful info.

Most people who use BI on large datasets in Hadoop take the approach of selectively extracting the data (into a QVD or TDE) and loading it into memory. The discussion here, however, is about what to do when extract-and-load is not a practical option: the extract itself is still too big, the lag time is too high, or there are other constraints. In such cases, live access to the data at its source (Direct Discovery, Live Connect) is the preferable approach.

Indeed, Tableau’s Live Connect is the more mature interface, but Qlik’s Direct Discovery can be made to overcome its key limitations and provide similar functionality. Specifically, the integration work done by Jethro and Qlik addresses the following issues:

- Ability to map a complex data model (e.g., a star schema) into Direct Discovery

- Ability to run in-DB functionality that emulates Qlik’s set analysis functionality and syntax

A great video by David Freriks, a Technology Evangelist on the Innovation and Design team at Qlik, demonstrates this solution:

https://www.youtube.com/watch?v=leJSWeFaWX8

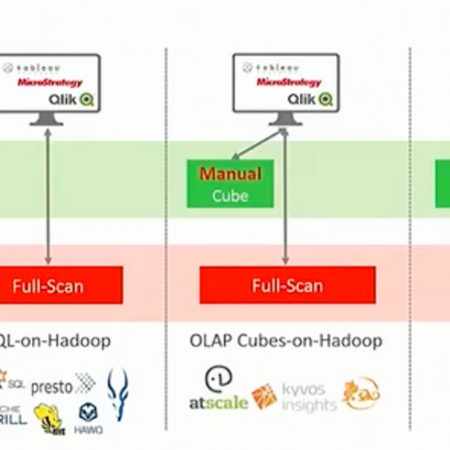

Once you are able to connect your BI tool live to your datasets in Hadoop, you’re likely to encounter the next challenge: SQL-on-Hadoop tools (e.g., Hive) are usually too slow for acceptable interactive use. As noted earlier, there is constant progress being made in this area, and the latest versions of Impala and Hawq are significantly faster than earlier releases. Still, the architecture used by all of these tools is nearly identical: they are all MPP / full-scan engines. And while this architecture was effective with high-end appliances (e.g., Teradata), it is less efficient after the switch to off-the-shelf hardware, especially when combined with much larger dataset sizes and more complex workloads.

At Jethro, we address this issue by using a different architecture: full indexing. By pre-indexing all the columns, Jethro can serve typical BI queries much faster than full-scan-based engines and with significantly fewer cluster resources.
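To make the difference between the two architectures concrete, here is a toy sketch in Python. It is not Jethro's implementation or file format; the table, the column names, and the helper functions (`full_scan_sum`, `build_index`, `indexed_sum`) are all hypothetical and only illustrate the general idea of answering a filtered aggregate from per-column indexes instead of reading every row.

```python
from collections import defaultdict

# Hypothetical fact table: each row maps column name -> value.
ROWS = [
    {"region": "EU", "product": "A", "sales": 10},
    {"region": "US", "product": "A", "sales": 20},
    {"region": "EU", "product": "B", "sales": 30},
    {"region": "US", "product": "B", "sales": 40},
    {"region": "EU", "product": "A", "sales": 50},
]


def full_scan_sum(rows, filters, measure):
    """Full scan: read every row and test each filter against it."""
    return sum(
        row[measure]
        for row in rows
        if all(row[col] == val for col, val in filters.items())
    )


def build_index(rows, columns):
    """Pre-index: for each column, map every value to the row ids holding it."""
    index = {col: defaultdict(set) for col in columns}
    for row_id, row in enumerate(rows):
        for col in columns:
            index[col][row[col]].add(row_id)
    return index


def indexed_sum(rows, index, filters, measure):
    """Index lookup: intersect row-id sets, then read only the matching rows."""
    id_sets = [index[col].get(val, set()) for col, val in filters.items()]
    if id_sets:
        matching = set.intersection(*id_sets)
    else:
        matching = set(range(len(rows)))  # no filter: every row qualifies
    return sum(rows[i][measure] for i in matching)


# The index is built once, ahead of query time (assumed here for all
# filterable columns).
INDEX = build_index(ROWS, ["region", "product"])
```

For a typical BI-style filter such as `{"region": "EU", "product": "A"}`, both functions return the same total, but the indexed version touches only the rows whose ids survive the intersection, whereas the full scan reads the whole table regardless of how selective the filter is.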

We have a live demo of Jethro + Qlik available at: Qlik Sense.

See original blog post and comment thread here.